A cute robot assistant can prevent your bad habits

A user's behavior can be recognized with computer vision and fed as input to a system dynamics model that describes the robot's emotional state. Using these emotions to trigger reactions in the robot, I studied how our cute friend Cozmo could help treat bad habits.

Thanks to Dr. Chernova, I had the chance to beta test this cute little robot called Cozmo.

Cozmo is built around a very simple concept: an OLED display acts as his face and expresses emotions by animating his eyes. The team at Anki gave us a basic Python API to communicate with the robot, trigger actions, modify its behavior, and access the camera and other sensors such as the accelerometer and gyroscope.

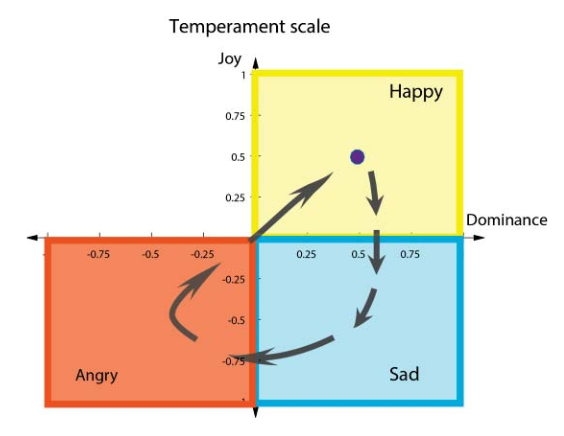

I wanted to explore an emotion-based approach for driving the robot's actions, and to see how a robotic assistant could help treat body-focused repetitive behaviors. I defined a two-axis temperament scale that determines the robot's emotion from its state in this x-y coordinate system.

Temperament scale. Feelings are encoded in an R² coordinate system of Joy and Dominance; the coordinates elicit feelings in three categories, Happy, Angry, and Sad, each of which triggers a different action response from the robot.
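The caption does not say where the boundaries between the three regions lie. As a minimal sketch, assuming a hypothetical partition in which any point with non-negative joy is Happy and negative joy splits into Angry or Sad by the sign of dominance, the mapping from coordinates to feeling could look like this (`Feeling` and `classify` are illustrative names, not part of the Cozmo API):

```python
from enum import Enum


class Feeling(Enum):
    HAPPY = "happy"
    ANGRY = "angry"
    SAD = "sad"


def classify(joy: float, dominance: float) -> Feeling:
    """Map a point on the (Joy, Dominance) plane to one of three feelings.

    Hypothetical partition: non-negative joy reads as Happy; negative joy
    splits into Angry (non-negative dominance) or Sad (negative dominance).
    """
    if joy >= 0.0:
        return Feeling.HAPPY
    if dominance >= 0.0:
        return Feeling.ANGRY
    return Feeling.SAD


if __name__ == "__main__":
    for point in [(0.6, -0.2), (-0.5, 0.7), (-0.4, -0.8)]:
        print(point, classify(*point).value)
```

The zero thresholds are only an illustrative choice; any split of the Joy-Dominance plane into three regions would slot into the same function, and each returned feeling can then be looked up to pick the robot's reaction.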

I trained the robot to detect when a person is biting her nails and used that signal as the input to a system dynamics model that drives the robot's emotions. Then, using the emotions to trigger the robot's actions, I studied how our cute friend Cozmo could help treat nail-biting behavior. You can check out how this setup works in this video:
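The post does not spell out the equations of the system dynamics model. Here is a minimal sketch of the idea under loudly hypothetical assumptions: joy and dominance are treated as two stocks, the vision classifier reports one boolean `biting` flag per time step, biting drains joy and builds dominance, and otherwise both stocks relax back toward a resting point. Every constant and name (`step`, `simulate`, `JOY_DRAIN`, and so on) is invented for illustration, and the model is integrated with forward Euler:

```python
from dataclasses import dataclass

# Hypothetical model constants; the post does not give the real ones.
JOY_REST = 0.5         # joy the robot relaxes back to when no biting is seen
DOMINANCE_REST = -0.3  # dominance the robot relaxes back to
JOY_DRAIN = 1.0        # joy lost per unit time while biting is detected
DOMINANCE_GAIN = 0.2   # dominance gained per unit time while biting is detected
RELAX = 0.2            # first-order relaxation rate toward the resting point
DT = 0.1               # integration step (one detector reading per step)


@dataclass(frozen=True)
class EmotionState:
    joy: float = JOY_REST
    dominance: float = DOMINANCE_REST


def clamp(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Keep each stock inside the [-1, 1] temperament square."""
    return max(lo, min(hi, x))


def step(state: EmotionState, biting: bool) -> EmotionState:
    """Advance both stocks by one forward-Euler step."""
    b = 1.0 if biting else 0.0
    d_joy = -JOY_DRAIN * b + RELAX * (JOY_REST - state.joy)
    d_dom = DOMINANCE_GAIN * b + RELAX * (DOMINANCE_REST - state.dominance)
    return EmotionState(
        joy=clamp(state.joy + DT * d_joy),
        dominance=clamp(state.dominance + DT * d_dom),
    )


def simulate(signal, state=None):
    """Run the model over a sequence of per-step detector outputs."""
    state = EmotionState() if state is None else state
    for biting in signal:
        state = step(state, biting)
    return state


if __name__ == "__main__":
    brief = simulate([True] * 10)      # short bout of nail biting
    sustained = simulate([True] * 40)  # biting keeps going
    recovered = simulate([False] * 100, state=sustained)
    for name, s in [("brief", brief), ("sustained", sustained), ("recovered", recovered)]:
        print(f"{name:>9}: joy={s.joy:+.2f} dominance={s.dominance:+.2f}")
```

With the resting point at positive joy and negative dominance, a short bout of biting pulls joy below zero while dominance stays negative; only sustained biting pushes dominance positive as well. The robot's mood therefore escalates gradually instead of flipping on a single detection, and it drifts back toward the resting point once the behavior stops.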

You can read more about this in the paper:

“Combined Strategy of Machine Vision with a Robotic Assistant for Nail Biting Prevention”